Having the right data is the cornerstone of all digital marketing and eCommerce activities. This is why you need to check your data periodically and before you start any important project or engagement.

Data and decision making

We all use data in our day-to-day decision making.

We use data from our senses to navigate through the world. Data from our eyes and ears goes to our brain to be processed.

We trust the data that we receive – otherwise we wouldn’t be able to walk, talk or go to the bank. In fact, we are conditioned to trust the information that is provided to us.

We have similar trust in the data that is provided to us in a business context – and that can lead to problems sometimes.

We trust the numbers that we see on reports, the tools that we use and the people we work with.

Moreover, we trust this information without understanding how the data is collected and processed, and without all the relevant context.

So, before we can trust the data we see, we have to audit our Web Analytics data.

The cost of mistakes

The cost of making bad decisions based on poor data can be huge.

In fact, the estimated cost of bad data in the US is $3.1 trillion per year.

Two well-known examples show the cost of mistakes that resulted from bad or incomplete data:

NASA Mars Orbiter – Mission failure and financial loss

In 1999, NASA lost the Mars Climate Orbiter after years of planning and 9 months in space. A conflict between imperial and metric measurements sent the spacecraft off course and it broke up in the Martian atmosphere, a loss of $327,600,000.

Data insight: This is a system integration and data processing issue – two critical systems were using different measurement units, without a proper conversion.
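As a rough illustration of what 'a proper conversion' can look like, here is a minimal Python sketch in which every value carries its unit and is converted explicitly at the boundary between systems. The `Impulse` type and the function name are hypothetical and chosen for this example only; they are not taken from NASA's software.

```python
from dataclasses import dataclass

# Exact conversion factor: 1 pound-force = 4.4482216152605 newtons
LBF_TO_NEWTON = 4.4482216152605


@dataclass(frozen=True)
class Impulse:
    """An impulse value that always carries its unit (hypothetical type)."""
    value: float
    unit: str  # expected: "N*s" or "lbf*s"


def to_newton_seconds(impulse: Impulse) -> float:
    """Convert an impulse to newton-seconds, rejecting unknown units."""
    if impulse.unit == "N*s":
        return impulse.value
    if impulse.unit == "lbf*s":
        return impulse.value * LBF_TO_NEWTON
    raise ValueError(f"Unknown unit: {impulse.unit!r}")


# The same number means very different things depending on the unit.
metric = to_newton_seconds(Impulse(100.0, "N*s"))
imperial = to_newton_seconds(Impulse(100.0, "lbf*s"))
```

The point is not the arithmetic but the refusal to accept a bare number: a value with a missing or unexpected unit raises an error instead of silently flowing into the next system.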

New Coke Launch – Partial data is worse than no data?

In 1985, Coca Cola decided to replace the classic Coke recipe with a new formula. Coca Cola invested heavily in the campaign, but the new drink proved to be very unpopular – causing declining sales and up to 8,000 angry customer calls a day.

Coca Cola back-tracked and returned to the original formula after 79 days.

Data insight: Coca Cola did thorough market research about the taste of the new formula, but didn’t take into account other factors in the customers’ purchasing decision. So they had accurate but partial data – data that was not presented within the wider context.

What does bad data look like in digital marketing?

I’m going to look at 3 examples—one from each of the main areas of the customer journey: Acquisition, Engagement and Conversion.

1. Loss of Traffic Source

Measuring Acquisition: (Lesson in good-practice – why you should check your tracking before you start a campaign)

You launch a big campaign, using Paid Advertising, Social, and Email to send people to a state-of-the-art landing page, designed and built by a highly rated agency.

But in the process you are redirecting people to a different page on your site, and the redirect does not carry over the traffic source the user arrived from.

Those people will be tagged as ‘Direct’ traffic, and in some cases will start a new visit to the site. As a result, none of the conversions, sales etc. can be attributed to any particular Marketing Channel.

You will not be able to accurately calculate ROI or ROAS (return on ad spend) or check how well the landing page is working for each marketing channel.
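To make the impact concrete, here is a small Python sketch of the ROAS calculation (revenue attributed to a channel divided by the spend on that channel). All figures and channel names are invented for illustration; the second revenue dictionary assumes every sale has been re-labelled as ‘Direct’ by the broken redirect.

```python
def roas(attributed_revenue: float, ad_spend: float) -> float:
    """Return on ad spend: revenue attributed to a channel divided by its spend."""
    if ad_spend <= 0:
        raise ValueError("ad_spend must be positive")
    return attributed_revenue / ad_spend


# Invented figures, for illustration only
spend = {"paid_search": 1000.0, "social": 500.0}

# What the report would show if the traffic source survived the redirect
revenue_if_tracked = {"paid_search": 4000.0, "social": 1500.0}

# What the report shows when the redirect drops the source:
# the same sales, all credited to 'direct'
revenue_as_reported = {"paid_search": 0.0, "social": 0.0, "direct": 5500.0}

actual = {ch: roas(revenue_if_tracked[ch], spend[ch]) for ch in spend}
reported = {ch: roas(revenue_as_reported[ch], spend[ch]) for ch in spend}
```

In this made-up scenario the campaign paid for itself several times over, yet every paid channel reports a ROAS of zero.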

Data insight: This is mainly a process issue – as part of the preparations for the campaign launch the Web Analytics tracking should have been checked. The technical challenge is minimal.

Similar issues: Loss of traffic source can happen in other scenarios, for example when using a social login (‘sign in with Facebook’), or when using a third party payment gateway (e.g. PayPal).

To fix: A Web Analytics audit should include a review of good practice and tagging processes as well as checking the quality of data and implementation.
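For tagged campaigns, one common way to keep the traffic source through a redirect is to copy the campaign parameters from the incoming URL onto the redirect target. The sketch below uses only Python's standard library; the function name and the list of parameters are assumptions for this example, and it does not address untagged traffic, where the source comes from the referrer rather than the URL.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Query parameters that carry the traffic source (assumed list; extend as needed)
CAMPAIGN_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content", "gclid",
}


def redirect_target(incoming_url: str, target_url: str) -> str:
    """Build the redirect URL, copying campaign parameters from the incoming URL."""
    incoming = urlsplit(incoming_url)
    target = urlsplit(target_url)

    # Parameters already present on the target take precedence over copied ones.
    target_params = dict(parse_qsl(target.query, keep_blank_values=True))
    for key, value in parse_qsl(incoming.query, keep_blank_values=True):
        if key in CAMPAIGN_PARAMS and key not in target_params:
            target_params[key] = value

    return urlunsplit(target._replace(query=urlencode(target_params)))


new_url = redirect_target(
    "https://example.com/promo?utm_source=facebook&utm_medium=paid&x=1",
    "https://example.com/landing?ref=a",
)
```

A check like this is cheap to run against every campaign URL before launch, which is exactly the kind of process step the audit should look for.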

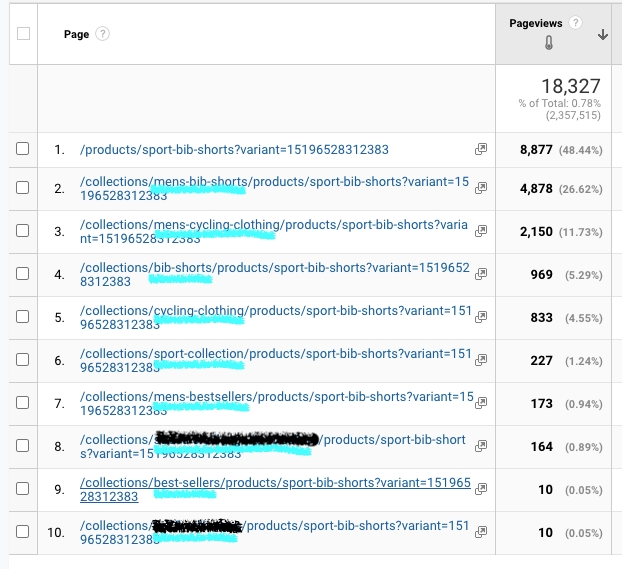

2. Page Name Variations – Measuring Engagement

Many e-commerce platforms (e.g. Shopify) will ‘give’ product pages a different page url and therefore page name, based on the route the visitor took to get to this page.

One of our clients had 33 tracked versions of the same product page.

In another case, only 55% of the visitors landing on a homepage were captured in Google Analytics without page name variations – the other 45% were split across 2,029 homepage variations:

| Page | Share of visits |
|---|---|
| / | 55% |
| /?result=success | 7.4% |
| /?result=success&token=123 | 2.2% |
| /fts=0 | 0.9% |
| /?dm_t=0,0,0,0,0 | 0.3% |

The impact of having page name ‘inflation’ can be serious for conversion rate optimisation (CRO).

It causes a huge headache when trying to do any navigational or behavioural analysis, for example to find out where visitors go from the homepage.

Data insights: There are two issues here:

- Implementation / data collection – the page name is captured ‘as is’ on every page url. No overriding naming convention was applied.

- Analytics setup issue – Google Analytics was not configured to remove irrelevant bits from the page name.

Similar issues: With product names, it is critical to capture the name excluding size and colour variations – if the product name changes in the checkout process you won’t be able to report and improve the full purchase journey.

To fix: Apply a robust naming convention to page tracking, using the data layer. Additionally, check your data regularly, so you can spot and manage changes before they become a serious issue.
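One possible shape for such a naming convention is sketched below in Python: keep the path, drop every query parameter that is not on an explicit allow-list, and tidy the trailing slash. The function name and the allow-list are assumptions for this example; the same logic could sit wherever the page name is set, whether that is in the data layer or in a later processing step.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit

# Query parameters that change what the page shows and should be kept
# (assumed allow-list; every other parameter is dropped)
KEEP_PARAMS = {"q", "page"}


def normalise_page_name(url: str) -> str:
    """Return a canonical page name: the path plus allow-listed parameters only."""
    parts = urlsplit(url)
    # Treat "/shoes/" and "/shoes" as the same page; keep "/" for the homepage.
    path = parts.path.rstrip("/") or "/"
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key in KEEP_PARAMS
    )
    return f"{path}?{urlencode(kept)}" if kept else path


homepage_variants = ["/", "/?result=success", "/?result=success&token=123", "/?dm_t=0,0,0,0,0"]
names = {normalise_page_name(variant) for variant in homepage_variants}
```

With this rule the four homepage variants above collapse into a single page name. Variations that live in the path itself rather than in the query string would need an additional rule.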

3. Conversion – Measuring Intent vs. Success.

(A lesson in tracking expertise, a single source of truth and conflict between two companies)

We were asked by a client to reconcile the difference between the apparent success of a Facebook Ads campaign, as claimed by their agency, and the lack of such success evident in Google Analytics.

The purpose of the campaign was to acquire new users, and the main conversion target was the creation of new accounts.

The agency claimed XX% Conversion Rate for the campaign, but the client saw much lower results in Google Analytics and their back-end platform (actual accounts created).

After investigation we discovered the following:

- The conversion success metric that was set by the agency on Facebook Ads was people reaching the account setup page. The success metric captured in Google Analytics, and the one that mattered to the client, was actual accounts created.

- In addition, the client assigned a monetary value to accounts created according to the type of account. The agency was not able to set up a dynamic value for conversion as the value is set AFTER reaching the account setup page.

Data insights: Technically, this is an Analytics setup issue – the same interaction should have been selected as the conversion success in both platforms. However, it also touches on good-practice processes and communication between the client and the agency. After all, marketing agencies have a vested interest in showing good results.

Similar issues: Google Ads, email, CRM, and other analytics platforms can all track and report on performance. Each system is set up, implemented and maintained separately, so there are many pitfalls to be wary of.

To fix: An important part of a Web Analytics audit is to check that the data from all the platforms in your digital eco-system is consistent. This includes checking that the systems are integrated correctly, both on a technical level and on a process / practice level.

A data review before the campaign started and a regular review of the data would have revealed the issue before it became a relationship problem between the companies.
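A regular review of this kind can be as simple as comparing the conversion count each platform reports against one agreed source of truth. The Python sketch below flags any platform that deviates from the reference by more than a chosen tolerance. The function name, platform labels, tolerance and numbers are all assumptions made up for this example, not the client's data.

```python
from __future__ import annotations


def reconcile(counts: dict[str, int], reference: str, tolerance: float = 0.05) -> dict[str, float]:
    """Return the platforms whose conversion count deviates from the reference
    platform by more than `tolerance`, as a fraction of the reference count."""
    base = counts[reference]
    if base <= 0:
        raise ValueError("Reference count must be positive")

    flagged = {}
    for platform, count in counts.items():
        if platform == reference:
            continue
        deviation = (count - base) / base
        if abs(deviation) > tolerance:
            flagged[platform] = deviation
    return flagged


# Illustrative numbers only
counts = {"backend": 200, "google_analytics": 195, "facebook_ads": 900}
flagged = reconcile(counts, reference="backend")
```

Here the back-end platform is treated as the source of truth because it holds the accounts that were actually created. A large deviation does not say which platform is wrong; it only says that the definitions need to be compared.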

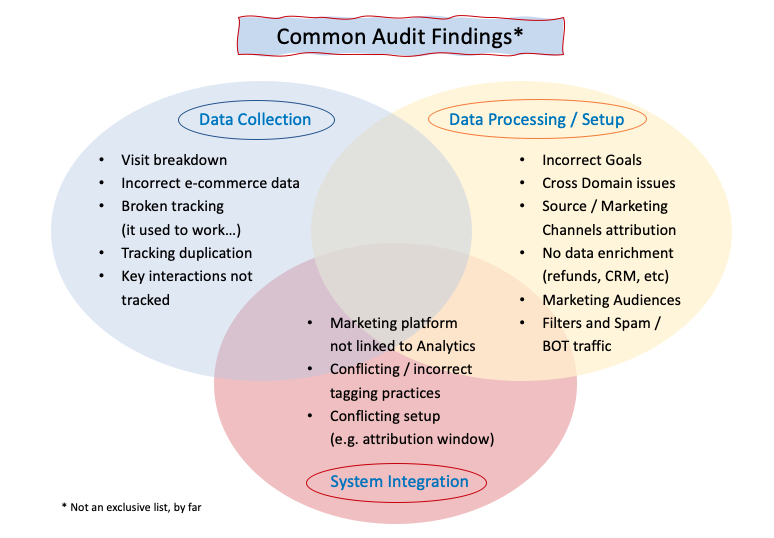

Of course, there are many more possible data issues that a web analytics audit can reveal.

A few last words on data audits

I hope we can all agree that Data Audits are essential. But for a Data Audit to be successful and effective, it has to be comprehensive and thorough.

To achieve that you’ll have to:

Include all relevant platforms and data sets used by the business

This includes access to platforms and the teams that use them. To do that you’ll have to engage all sides of the business, from tech to operations and marketing.

Look at data in the context of how it is collected, processed and mainly how it is used by the business.

For example – it might be important for a business to collect Net Revenue (excluding tax and shipping) as opposed to Revenue (inclusive of all). Revenue might be collected and processed perfectly, but in this case not useful to the client.

Look at data in the context of users’ behaviour.

The data we use represents users’ behaviour, but may be misleading if we don’t know the behavioural context. So as well as using technology to audit your data it’s really important to put yourself in the shoes of your customer. Practically, it means you have to emulate your customer’s journey and interactions to understand what the data you collect means. For example – the data says Social channel converts very well, but when you emulate your customer’s journey you see that your registration form is so bad people prefer to register with their Google account, giving ‘Social’ the credit for traffic that originally arrived from a different source.

Impartiality

Make sure the Data Audit is performed by an impartial party, ideally new to your setup. Use fresh eyes to get a thorough investigation and a fair evaluation of the state of your data.